Understanding Python Web Scraping for Articles


Oct 9, 2024

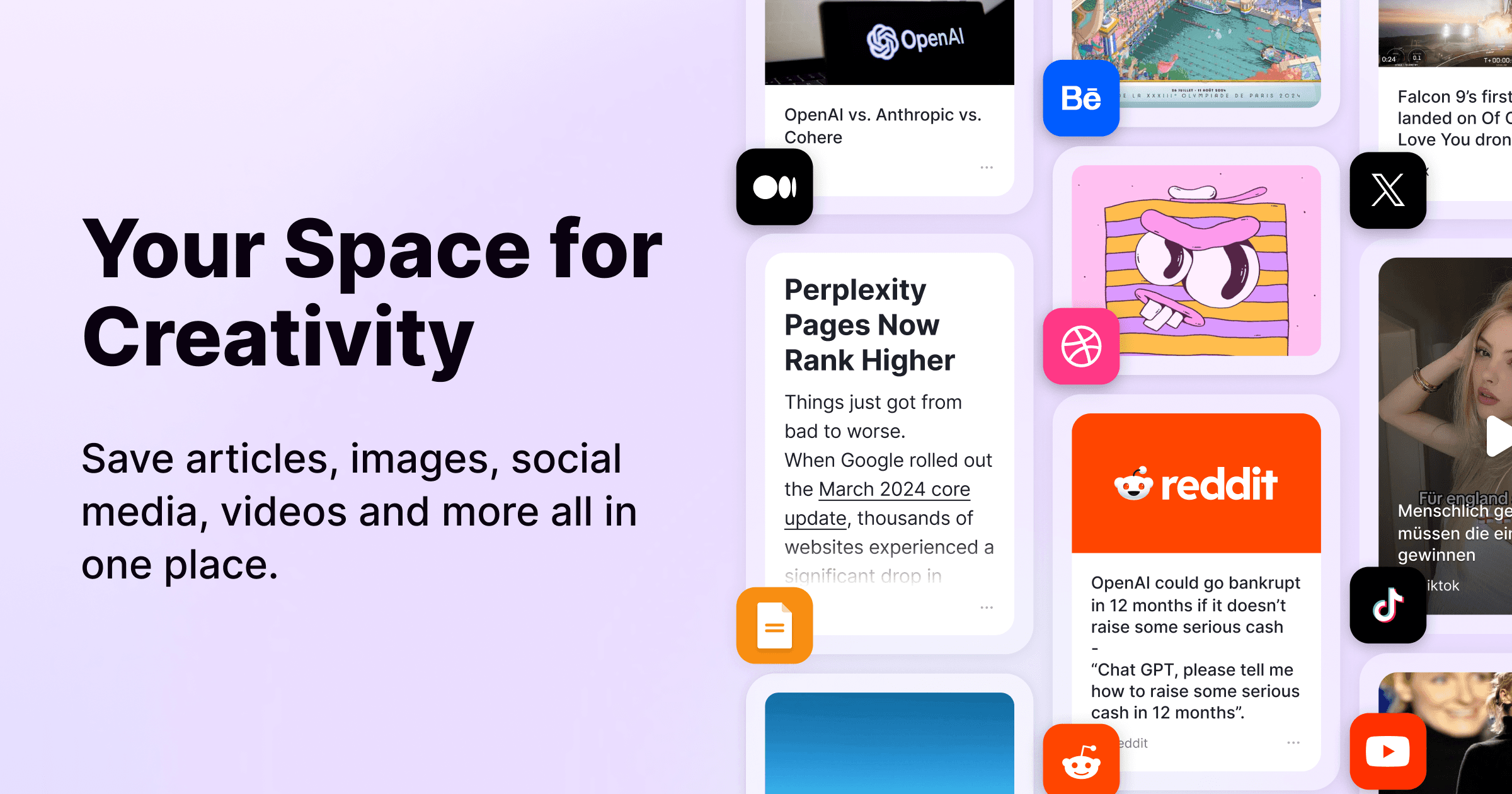

Web scraping is a powerful technique used to extract data from websites, and Python is one of the most popular programming languages for this task. This article will guide you through the process of scraping articles from the web using Python, focusing on essential libraries, best practices, and practical examples.

What is Web Scraping?

Web scraping involves programmatically retrieving data from web pages. It allows users to collect information like text, images, and metadata without manual effort. This technique is particularly useful for SEO professionals, researchers, and marketers who need to gather large amounts of data quickly and efficiently.

Why Use Python for Web Scraping?

Python is favored for web scraping due to its simplicity and the availability of powerful libraries. Key benefits include:

Ease of Use: Python's syntax is straightforward, making it accessible for beginners.

Robust Libraries: Requests fetches pages, BeautifulSoup parses HTML, and Scrapy runs full crawls, so each stage of a scraping job has a mature, well-tested tool.

Community Support: A large community means ample resources and documentation are available.

Essential Libraries for Web Scraping

Requests: Used to send HTTP requests to retrieve web pages.

BeautifulSoup: Parses HTML and XML documents, making it easy to navigate and search the parse tree.

Scrapy: A comprehensive framework for building web scrapers that can handle complex tasks.

Installation

To get started, install the necessary libraries using pip: pip install requests beautifulsoup4 (add scrapy to that command only if you plan to use Scrapy).

Step-by-Step Guide to Scrape an Article

1. Import Libraries

First, import the required libraries in your Python script.
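A minimal sketch of the imports the steps below rely on, assuming both packages are already installed. Beautiful Soup is installed as beautifulsoup4 but imported from the bs4 module.

```python
# Requests handles HTTP; Beautiful Soup (pip package "beautifulsoup4") parses HTML.
import requests
from bs4 import BeautifulSoup
```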

2. Send an HTTP Request

Use the Requests library to fetch the content of the webpage.
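A minimal sketch of this step. The URL and the User-Agent string are placeholders (assumptions, not values from any real site), and fetch_page is a hypothetical helper name. The timeout keeps a stalled server from hanging the script, and raise_for_status() makes a 404 or 500 fail loudly instead of handing an error page to the parser.

```python
import requests

# Placeholder values: substitute the article you want and an identifier for your own scraper.
ARTICLE_URL = "https://example.com/blog/some-article"
HEADERS = {"User-Agent": "my-article-scraper/0.1"}


def fetch_page(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as text."""
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    # Turn 4xx/5xx status codes into a requests.HTTPError instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

Calling html = fetch_page(ARTICLE_URL) returns the page's HTML as a string, ready for parsing.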

3. Parse the HTML Content

Once you have the page content, use BeautifulSoup to parse it.
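A minimal sketch of the parsing step. A small hand-written page stands in for downloaded HTML so the example runs offline, and parse_html is a hypothetical helper name. It uses Python's built-in "html.parser" backend, so no extra parser such as lxml is required.

```python
from bs4 import BeautifulSoup


def parse_html(html: str) -> BeautifulSoup:
    # "html.parser" ships with Python, so no additional parser needs installing.
    return BeautifulSoup(html, "html.parser")


# Hand-written HTML standing in for the page fetched in the previous step.
sample_html = "<html><head><title>Example Post</title></head><body><h1>Hello</h1></body></html>"
soup = parse_html(sample_html)
print(soup.title.string)   # Example Post
print(soup.h1.get_text())  # Hello
```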

4. Extract Data

Identify the HTML elements containing the data you want to extract (e.g., title, headings, paragraphs).
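A minimal sketch of extracting the title, headings, and paragraphs. It assumes the article body sits inside an <article> element, which many blogs use but which is not guaranteed; real sites usually need selectors chosen by inspecting their markup. extract_article is a hypothetical helper name.

```python
from bs4 import BeautifulSoup


def extract_article(soup: BeautifulSoup) -> dict:
    """Collect the title, subheadings, and paragraph text from a parsed page."""
    # Limit the search to <article> when present, otherwise use the whole page.
    container = soup.find("article")
    if container is None:
        container = soup
    title_tag = soup.find("h1")
    if title_tag is None:
        title_tag = soup.title  # fall back to the <title> element (may also be None)
    return {
        "title": title_tag.get_text(strip=True) if title_tag is not None else None,
        "headings": [h.get_text(strip=True) for h in container.find_all(["h2", "h3"])],
        "paragraphs": [p.get_text(strip=True) for p in container.find_all("p")],
    }


page = """
<html><head><title>Site | My Post</title></head>
<body>
  <nav><p>Menu</p></nav>
  <article>
    <h1>My Post</h1>
    <h2>Introduction</h2>
    <p>First paragraph.</p>
    <p>Second paragraph.</p>
  </article>
</body></html>
"""
article = extract_article(BeautifulSoup(page, "html.parser"))
print(article["title"])       # My Post
print(article["headings"])    # ['Introduction']
print(article["paragraphs"])  # ['First paragraph.', 'Second paragraph.']
```

Because the search is limited to <article>, the navigation menu's <p> is skipped. The explicit None checks also keep a page without an <h1> or <title> from raising an AttributeError.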

5. Save or Process Data

You can save the extracted data to a file or process it further as needed.
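A minimal sketch of saving results, shown with two common formats: JSON for the whole record and CSV for a flat list of paragraphs. The helper names and the sample data are hypothetical, and a temporary directory is used so the demo leaves nothing behind in your project.

```python
import csv
import json
import tempfile
from pathlib import Path


def save_json(article: dict, path: Path) -> None:
    # ensure_ascii=False keeps non-English text readable in the output file.
    path.write_text(json.dumps(article, ensure_ascii=False, indent=2), encoding="utf-8")


def save_paragraphs_csv(paragraphs: list, path: Path) -> None:
    # newline="" stops the csv module from writing blank lines between rows on Windows.
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["index", "text"])
        for i, text in enumerate(paragraphs):
            writer.writerow([i, text])


# Example data standing in for the output of the extraction step.
article = {"title": "My Post", "paragraphs": ["First paragraph.", "Second paragraph."]}
out_dir = Path(tempfile.mkdtemp())  # throwaway directory for this demo
save_json(article, out_dir / "article.json")
save_paragraphs_csv(article["paragraphs"], out_dir / "paragraphs.csv")
```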

Best Practices for Web Scraping

Respect robots.txt: Always check a website's robots.txt file to see which pages its owner permits crawlers to access, and follow those rules.

Limit Requests: Avoid overwhelming a server with too many requests in a short period. Add a delay between requests if necessary.

Handle Exceptions: Implement error handling to manage potential issues like connection errors or missing elements.
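The three practices above can be combined into one sketch, assuming the Requests and BeautifulSoup setup used throughout this article. It reads robots.txt with the standard-library urllib.robotparser, honours a Crawl-delay directive when the site sets one, pauses between requests, catches requests.RequestException (the base class for Requests' connection, timeout, and HTTP errors), and returns a default when an element is missing. USER_AGENT, load_robots, polite_fetch, and text_or_default are hypothetical names, and the 2-second default delay is an arbitrary choice rather than a value any site prescribes.

```python
import logging
import time
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

import requests
from bs4 import BeautifulSoup

USER_AGENT = "my-article-scraper/0.1"  # placeholder identifier for your scraper


def load_robots(page_url: str) -> RobotFileParser:
    """Download and parse robots.txt for the site that hosts page_url."""
    parts = urlparse(page_url)
    robots = RobotFileParser(f"{parts.scheme}://{parts.netloc}/robots.txt")
    robots.read()  # network call via urllib
    return robots


def polite_fetch(urls: list, robots: RobotFileParser, delay: float = 2.0) -> dict:
    """Fetch allowed URLs one at a time, pausing between requests and skipping failures."""
    # Use the site's Crawl-delay directive if it sets one, otherwise the default.
    wait = robots.crawl_delay(USER_AGENT) or delay
    pages = {}
    requests_made = 0
    for url in urls:
        if not robots.can_fetch(USER_AGENT, url):
            logging.info("Skipping %s (disallowed by robots.txt)", url)
            continue
        if requests_made:
            time.sleep(wait)  # space out requests to the same server
        requests_made += 1
        try:
            response = requests.get(url, headers={"User-Agent": USER_AGENT}, timeout=10)
            response.raise_for_status()
        except requests.RequestException as exc:  # connection errors, timeouts, HTTP 4xx/5xx
            logging.warning("Failed to fetch %s: %s", url, exc)
            continue
        pages[url] = response.text
    return pages


def text_or_default(soup: BeautifulSoup, selector: str, default: str = "") -> str:
    """Return the text of the first element matching a CSS selector, or a default if it is missing."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else default


# Offline demo: parse rules from a list of lines instead of downloading robots.txt.
rules = RobotFileParser()
rules.parse(["User-agent: *", "Disallow: /private/"])
print(rules.can_fetch(USER_AGENT, "https://example.com/blog/post"))     # True
print(rules.can_fetch(USER_AGENT, "https://example.com/private/page"))  # False

doc = BeautifulSoup("<h1>Title</h1>", "html.parser")
print(text_or_default(doc, "h1"))                  # Title
print(text_or_default(doc, "h2.subtitle", "n/a"))  # n/a
```

In real use, robots = load_robots(url) followed by polite_fetch([...], robots) fetches pages sequentially. Logging and continuing, rather than raising, lets one failed page leave the rest of the batch intact.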

Conclusion

Python, together with libraries like Requests and BeautifulSoup, provides a robust toolkit for scraping articles efficiently. These libraries let you automate data collection that would otherwise be time-consuming. Whether you're gathering information for SEO purposes or conducting research, mastering Python web scraping opens up numerous possibilities for data analysis and insights.